A Dystopian Realm of Gender Shades

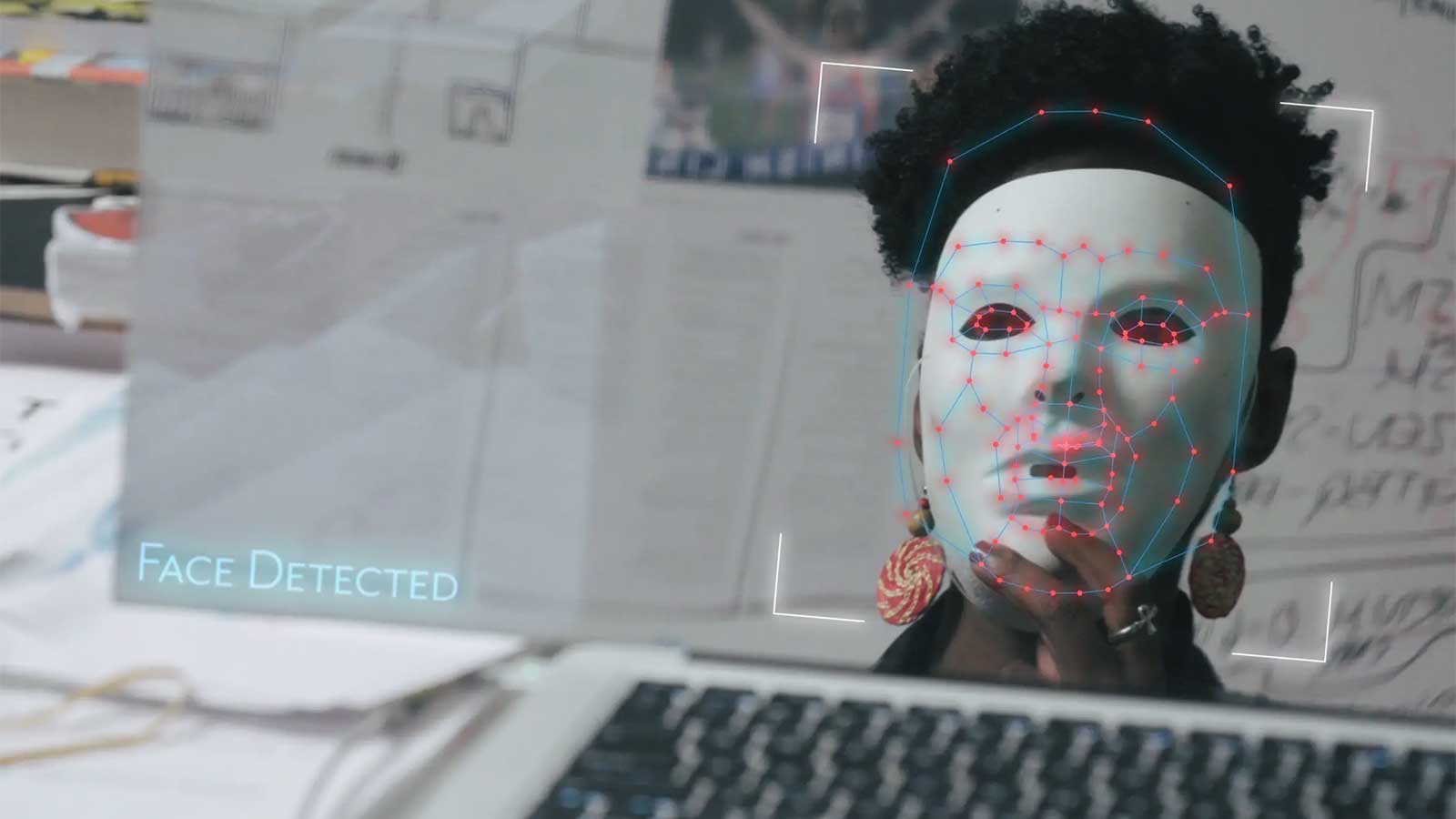

Buolamwini’s 2018 Gender Shades project at MIT Media Lab documented how facial recognition technologies from Amazon, Microsoft, and other companies consistently failed to recognize the faces of women and people of color, especially those like her, who were “highly melanated,” as she puts it. As she explained at the 2018 Wikimania Cape Town conference in South Africa, even IBM, which had the most egalitarian algorithm of the time, recognized white men’s faces with 99.7% accuracy and Black women’s faces with a dismal 65.3% accuracy. After Amazon criticized Gender Shades in a blog post, around 80 A.I. researchers, including the famous Canadian computer scientist Yoshua Bengio, endorsed its conclusions. Since Buolamwini’s 2018 study, companies have worked to reduce disparities in their algorithms’ accuracy. Nonetheless, the Department of Homeland Security’s February 2019 study of 11 facial recognition technologies found persistent inequities with regard to race, gender, and age.

The databases that train algorithms tend to reflect the demographics of their largely white and male programmers. In one scene, Buolamwini examines a database, flitting through picture after picture of blandly smiling politicians in suits. The overrepresentation of white men in training datasets and of Black people in criminal databases makes for a toxic combination, leading to racial and gender bias in police algorithms. The ACLU made this point vividly in 2018 when it tested Amazon’s Rekognition technology, meant to identify criminals, on members of the House and Senate. The program made 28 false matches that included “six members of the Congressional Black Caucus, among them civil rights legend, Representative John Lewis (D-Ga.).”

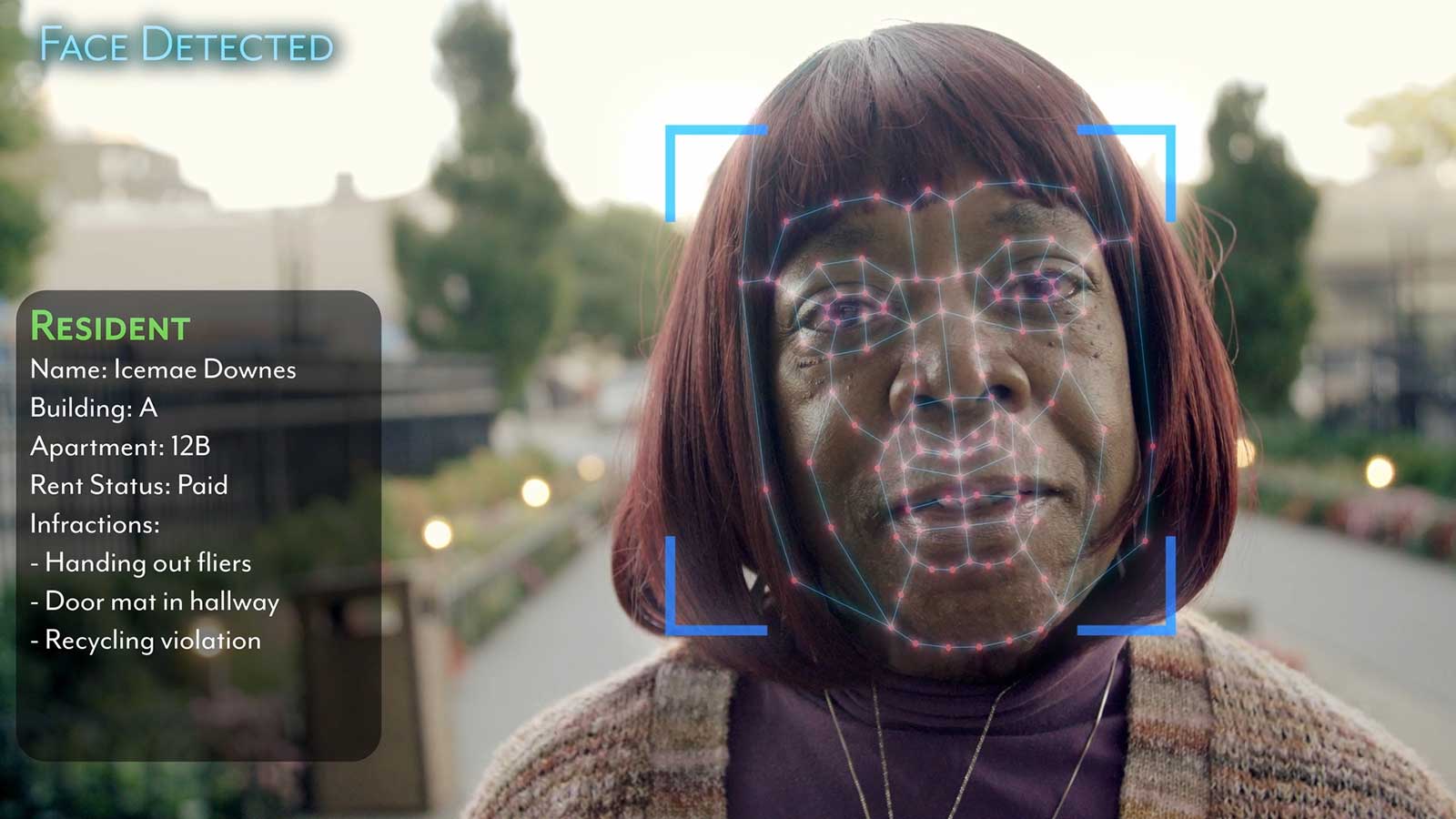

While facial recognition programs used by police are often faulty, they have also become more pervasive in many cities. Several scenes in the film profile the U.K. non-profit Big Brother Watch, which opposes the use of facial recognition to identify suspects. In one tense scene, several police officers fine a Londoner for instinctively covering his face while walking past a CCTV camera. This motif of citizen-police clashes recurs later in the film when plainclothes officers search a Black schoolboy whose face supposedly matches one in their criminal database. They are mistaken, but the shaken boy doesn’t receive an apology. Similar incidents surely recur in London every day, reminding viewers that American police don’t have a monopoly on racial profiling.

In the U.S., Amazon technologies have updated police surveillance for the 21st century. The megacorporation’s taxpayer-funded contracts with police departments across the country amount to old-school repression with a shinier gloss. As Buolamwini points out, the facial recognition databases available to law enforcement include information on over 117 million Americans, even though it remains illegal for law enforcement to harvest citizens’ fingerprints or DNA without their consent. A CCTV camera snapping someone’s photo at an intersection may not be overtly invasive, but it ultimately props up the same system of mass incarceration of people of color. Coded Bias points out that new technologies could make the gains of the Civil Rights Movement even more vulnerable.

Evolving Policies Around Facial Recognition Technology

Increasingly, governments and even companies themselves are recognizing facial recognition’s role in racial profiling. Criticism of this technology reached a fever pitch during the protests over the murder of George Floyd in May 2020. Two weeks later, IBM, one of the biggest providers of such technology, promised to end its sales and research of facial recognition software and declared its opposition to the use of such technology for mass surveillance and racial profiling. Amazon, meanwhile, announced that it would halt its contracts with police departments for one year, and 250 Microsoft employees signed a letter opposing the company’s contracts with law enforcement. Critics point out, however, that IBM’s departure runs the risk of leaving the industry reliant on less accurate software.

While Buolamwini applauds the existing developments, she wants more companies to take action. She endorses the Safe Face Pledge, which she says “prohibits lethal use of the technology, lawless police use, and requires transparency in any government use.” Governmental counterpressure has been even more dramatic. San Francisco, Oakland, and Cambridge have all prohibited law enforcement’s use of the technology, and in December 2020, the entire state of Massachusetts moved to ban police from using facial recognition technology.

Coded Bias also highlights how society tacitly consents to corporate surveillance through targeted ads and data mining. At its most sinister, online ads for casinos, payday lenders, for-profit colleges, and other moneysucks disproportionately bombard the poor and the vulnerable. Virginia Eubanks, author of Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor, points out in the film that sci-fi author William Gibson’s observation, “The future is already here – it’s just not very evenly distributed,” works in both directions. Tech that makes people’s lives more convenient is adopted by wealthy people first, but tech that exploits and monitors is foisted on poor people first.

To speculate on where all of this might lead, Coded Bias points to the “social credit system” of China, a country that matches the U.S. in its hyper-development of A.I. but lacks its democratic norms and separation of powers. For Buolamwini, the situations of other countries show us “potential futures.” While Coded Bias documents reasons for concern, it does not succumb to hopelessness. The film ends with a congressional hearing on facial recognition technology featuring Buolamwini’s testimony. In a rare example of bipartisanship, the firebrand leftist Alexandria Ocasio-Cortez and the Trump ally Jim Jordan both express concern over mass surveillance.

In October 2020, Congress released an extensive report outlining potential antitrust concerns regarding Big Tech. Nonetheless, congressional testimony has tackled only a few of activists’ myriad concerns. As the MIT Technology Review has argued, “The report’s main recommendations would do very little to solve real social problems caused by technology, like misinformation and election interference, because these problems aren’t related to competition.”

A high-tech film about high-tech injustices, Coded Bias ends with something decidedly more analog: people talking in a room together, enacting a tradition hundreds of years old. A.I. may have beaten us at chess, but it will never be able to swear an oath.

Ω
